1. Intro

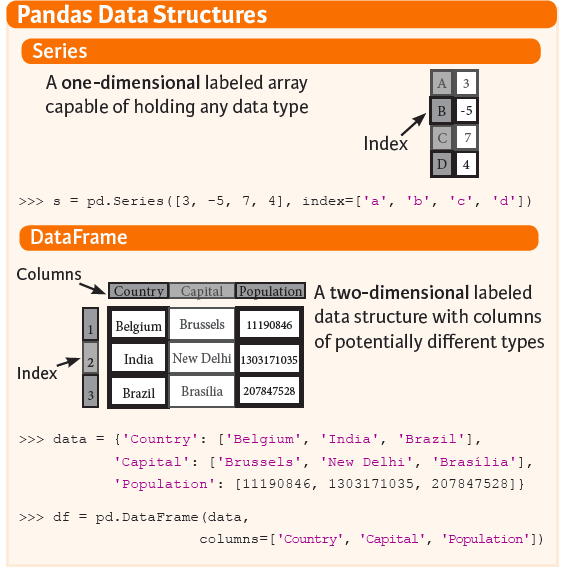

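So far, we have covered how to turn data into a DataFrame. Once the data is in a DataFrame, the next step is being able to process it to fit the researcher's or the analysis's requirements, which is ultimately the reason for learning Pandas in the first place.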

If you need a refresher on DataFrame basics, please see the post below:

https://chloevan.github.io/python/pandas/pandas_dataframe/

Working with columns is almost the same as the dictionary operations we covered earlier, so please go through this part carefully.

First, let's create a DataFrame.

```python
import pandas as pd

# Exam scores by subject: 국어 (Korean), 영어 (English), 수학 (Math), 과학 (Science)
exam_dic = {'국어': [80, 70, 90, 87], '영어': [100, 80, 50, 90],
            '수학': [85, 75, 65, 100], '과학': [89, 67, 90, 85]}

# One row per student, labeled by name
data = pd.DataFrame(exam_dic, index=['Evan', 'Chloe', 'Alice', 'John'])

print(data)
```

```
       국어  영어  수학  과학
Evan     80   100    85    89
Chloe    70    80    75    67
Alice    90    50    65    90
John     87    90   100    85
```

2. Working with Columns

There are several ways to work with columns, but they fall into three main operations:

- Column selection
- Column addition
- Column deletion
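The intro mentioned that working with columns closely mirrors working with dictionaries. As a rough side-by-side sketch of that parallel, here are the three operations on a plain dict and on a DataFrame. It uses a trimmed-down, two-subject copy of the exam data; the names d, df, and the 합계 (total) column are illustrative only.

```python
import pandas as pd

# Trimmed-down exam data (two subjects only, for illustration)
exam_dic = {'국어': [80, 70, 90, 87], '영어': [100, 80, 50, 90]}
d = dict(exam_dic)  # shallow copy so the original dict is left alone
df = pd.DataFrame(exam_dic, index=['Evan', 'Chloe', 'Alice', 'John'])

# Selection: key lookup in both cases
print(d['국어'])    # a plain list
print(df['국어'])   # a Series

# Addition: assign to a new key
d['합계'] = [k + e for k, e in zip(d['국어'], d['영어'])]
df['합계'] = df['국어'] + df['영어']

# Deletion: del and pop work on both
del d['합계']
del df['합계']
removed_list = d.pop('영어')
removed_series = df.pop('영어')

print(list(d.keys()))   # ['국어']
print(list(df.keys()))  # ['국어'] (DataFrame.keys() returns the column labels)
print('국어' in df)      # True: `in` checks column labels, like dict keys
```

One difference worth remembering: membership tests and iteration on a DataFrame work on the column labels, the same way they work on the keys of a dict.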

Let's go through them one at a time.

2.1 Selecting Columns

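There are two ways to select a column: put the column name inside brackets, as in data['column_name'], or use dot notation, as in data.column_name. Note that selecting a single column returns a Series, not a DataFrame. Dot notation also only works when the column name is a valid Python identifier that does not clash with an existing DataFrame attribute or method; bracket notation always works.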

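```python
data['국어']
```

```
Evan     80
Chloe    70
Alice    90
John     87
Name: 국어, dtype: int64
```

```python
data.국어
```

```
Evan     80
Chloe    70
Alice    90
John     87
Name: 국어, dtype: int64
```

To select two or more columns, pass a list of column names inside the brackets, as in data[['col_name1', 'col_name2', ..., 'col_nameN']]. This time the result is a DataFrame rather than a Series.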

Let's check this in code.

```python
data[['국어', '과학']]
```

```
       국어  과학
Evan     80    89
Chloe    70    67
Alice    90    90
John     87    85
```
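Here is a small sketch that checks the return types directly and shows one case where dot notation cannot reach a column. The column name 'count' is a hypothetical example, chosen because it collides with the built-in DataFrame.count method.

```python
import pandas as pd

exam_dic = {'국어': [80, 70, 90, 87], '영어': [100, 80, 50, 90],
            '수학': [85, 75, 65, 100], '과학': [89, 67, 90, 85]}
data = pd.DataFrame(exam_dic, index=['Evan', 'Chloe', 'Alice', 'John'])

one = data['국어']               # a single label returns a Series
one_as_frame = data[['국어']]    # a list with one label returns a one-column DataFrame
two = data[['과학', '국어']]     # columns come back in the order listed

print(type(one).__name__)            # Series
print(type(one_as_frame).__name__)   # DataFrame
print(two.columns.tolist())          # ['과학', '국어']

# 'count' is a hypothetical column name that collides with the DataFrame.count method
data['count'] = 1
print(callable(data.count))          # True: dot notation finds the method, not the column
print(data['count'].tolist())        # [1, 1, 1, 1]: brackets always reach the column
```

Because of collisions like this, bracket notation is generally the safer default, with dot notation as a shortcut for simple column names.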

2.2 Adding Columns

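Adding a column is easy if you picture adding a column in Excel. The syntax is simply data['new_column_name'] = values, and the part that needs care is the values on the right-hand side: a single value is applied to every row, a list must have exactly one value per row, and a Series is matched to rows by index label. First, let's add two columns: 언어영역합계 (language total, 국어 + 영어) and 수리영역합계 (math/science total, 수학 + 과학).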
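The right-hand side of the assignment can take a few forms, and each behaves differently. Before adding the totals, here is a small sketch on a trimmed-down copy of the exam data. The 반 (class) and 가산점 (bonus points) columns and their values are hypothetical, added only for illustration.

```python
import pandas as pd

data = pd.DataFrame({'국어': [80, 70, 90, 87], '영어': [100, 80, 50, 90]},
                    index=['Evan', 'Chloe', 'Alice', 'John'])

# 1) Scalar: broadcast to every row
data['수업태도점수'] = 10

# 2) List: must have exactly one value per row, matched by position
data['반'] = ['A', 'B', 'A', 'B']            # hypothetical class column

# 3) Series: matched by index label, not by position
bonus = pd.Series({'John': 5, 'Evan': 3})    # hypothetical bonus for two students only
data['가산점'] = bonus                        # Chloe and Alice get NaN

print(data)

# A list of the wrong length is rejected and no column is added
try:
    data['오류'] = [1, 2, 3]
except ValueError as err:
    print('ValueError:', err)
```

The Series case is the one that most often surprises people: values are matched by index label, so any row without a matching label ends up as NaN and the column becomes a float column.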

```python
# 언어영역합계 = 국어 + 영어, computed row by row
data['언어영역합계'] = data['국어'] + data['영어']

print(data)
```

```
       국어  영어  수학  과학  언어영역합계
Evan     80   100    85    89           180
Chloe    70    80    75    67           150
Alice    90    50    65    90           140
John     87    90   100    85           177
```

```python
# 수리영역합계 = 수학 + 과학
data['수리영역합계'] = data['수학'] + data['과학']

print(data)
```

```
       국어  영어  수학  과학  언어영역합계  수리영역합계
Evan     80   100    85    89           180           174
Chloe    70    80    75    67           150           142
Alice    90    50    65    90           140           155
John     87    90   100    85           177           185
```

Next, suppose every student gets 10 points for class participation (수업태도점수). Assigning a single value fills the whole column at once:

```python
# A single scalar is broadcast to every row
data['수업태도점수'] = 10

print(data)
```

```
       국어  영어  수학  과학  언어영역합계  수리영역합계  수업태도점수
Evan     80   100    85    89           180           174            10
Chloe    70    80    75    67           150           142            10
Alice    90    50    65    90           140           155            10
John     87    90   100    85           177           185            10
```

2.3 Deleting Columns

There are three main ways to delete a column:

- Using del
- Using pop
- Using drop
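The three methods differ in what they return and whether they change the original DataFrame. As a sketch (the helper make_data is hypothetical and simply rebuilds the example data so each method starts from the same frame):

```python
import pandas as pd

def make_data():
    # Fresh copy of the example so each method starts from the same frame
    return pd.DataFrame({'국어': [80, 70, 90, 87], '영어': [100, 80, 50, 90],
                         '수학': [85, 75, 65, 100], '과학': [89, 67, 90, 85]},
                        index=['Evan', 'Chloe', 'Alice', 'John'])

# del: removes the column in place and returns nothing
a = make_data()
del a['과학']

# pop: removes the column in place and returns it as a Series
b = make_data()
popped = b.pop('과학')

# drop: by default returns a NEW DataFrame and leaves the original untouched
c = make_data()
c_dropped = c.drop('과학', axis=1)

print(a.columns.tolist())          # ['국어', '영어', '수학']
print(popped.tolist())             # [89, 67, 90, 85]
print(c.columns.tolist())          # ['국어', '영어', '수학', '과학'] (unchanged)
print(c_dropped.columns.tolist())  # ['국어', '영어', '수학']
```

In short, pop is handy when you still need the removed column, and drop leaves the original alone unless you reassign the result or pass inplace=True.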

Let's start with del and delete the 수업태도점수 column.

```python
# del removes the column from data in place
del data['수업태도점수']

print(data)
```

```
       국어  영어  수학  과학  언어영역합계  수리영역합계
Evan     80   100    85    89           180           174
Chloe    70    80    75    67           150           142
Alice    90    50    65    90           140           155
John     87    90   100    85           177           185
```

Next, let's use pop to delete 수리영역합계.

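```python
# pop removes the column from data and returns it as a Series
data.pop('수리영역합계')

print(data)
```

```
       국어  영어  수학  과학  언어영역합계
Evan     80   100    85    89           180
Chloe    70    80    75    67           150
Alice    90    50    65    90           140
John     87    90   100    85           177
```

Finally, let's use drop to delete 언어영역합계. This time we pass two options, axis=1 and inplace=True. axis=1 tells drop that the label refers to a column, and inplace=True modifies the data object itself instead of returning a modified copy. For reference, axis=0 (the default) would delete rows, that is, index labels, instead.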
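To make the axis option concrete, here is a small sketch on a trimmed-down, two-subject copy of the exam data. inplace is left at its default (False), so each call returns a new DataFrame and data itself is not modified.

```python
import pandas as pd

data = pd.DataFrame({'국어': [80, 70, 90, 87], '영어': [100, 80, 50, 90]},
                    index=['Evan', 'Chloe', 'Alice', 'John'])

# axis=1: the label refers to a column
no_english = data.drop('영어', axis=1)

# axis=0 (the default): the label refers to a row (index) label
no_john = data.drop('John', axis=0)

# Keyword form: columns= (or index=) states the direction without axis
same_as_axis1 = data.drop(columns=['영어'])

print(no_english.columns.tolist())       # ['국어']
print(no_john.index.tolist())            # ['Evan', 'Chloe', 'Alice']
print(no_english.equals(same_as_axis1))  # True
```

The columns= and index= keywords do the same job as axis but read more explicitly, which can be easier than remembering which axis number means what.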

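```python
# axis=1: drop a column; inplace=True: modify data itself (drop then returns None)
data.drop('언어영역합계', axis=1, inplace=True)

print(data)
```

```
       국어  영어  수학  과학
Evan     80   100    85    89
Chloe    70    80    75    67
Alice    90    50    65    90
John     87    90   100    85
```

We have now covered the most basic operations for working with DataFrame columns. In practice you will run into more advanced techniques, but the fundamentals always matter, so make sure you are comfortable with these basic functions.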

I hope this was helpful.
